Why Our Content Marketing Agency Won’t Adopt ChatGPT or Bing AI

Updated on February 27, 2023.

If you’ve clicked on this post, you already know: generative AI tools have taken over the marketing world.

Everywhere I turn, my browser window is covered in discussions of ChatGPT, Bard, or Bing AI. Here’s the current home page on Search Engine Land with 3 out of 5 articles explicitly referencing AI:

Screenshot of the top section of Search Engine Land as seen on Feb 21, 2023.

But if you’ve seen any of my posts on social media lately (and as you can infer from this article’s title), you know that I am not a fan of AI writing tools.

In fact, we’ve decided not to use generative AI in any of our client work. And this post (unless otherwise stated in specific sections) was produced entirely by humans.

We are not alone in our skepticism toward generative AI and its supposed impact on content marketing. Ross Hudgens from Siege Media published a “Content Marketing Trends and Insights” report in which their team found that companies that do not use AI “report higher performance” from their content marketing.

Our team at Kalyna Marketing currently maintains the following perspective:

AI writing tools like ChatGPT and Bing AI cannot handle most content marketing tasks because the technology these tools are built on fails to create new ideas, understand meaning, or distinguish fact from fiction.

We Wish The Hype Was Real

Before Bing AI’s bizarre, aggressive responses to users or CNET’s spectacular pause on publishing AI-generated content because of factual mistakes, there was disappointment.

Sad, bitter, palpable disappointment.

I wanted the hype for generative AI to be real. More than most, I was so excited for these tools to help me with my marketing work. You see, I love technology and trying out software. What I don’t love is the process of writing a lot of content on a short deadline imposed by someone other than myself. I am the perfect user for ChatGPT.

But ChatGPT failed to win my heart and prove its value to my work, just as its predecessors failed before.

Personal Disappointment with Generative AI

After many hours, weeks, and months of fiddling with generative AI tools, I went nearly a month without typing a single prompt into a GPT-3-based tool.

How can that be? I run a content marketing agency, and everyone in my field is obsessed with OpenAI, Google, and Microsoft’s recent arms race to develop the perfect AI content generator and change the landscape of the internet as we know it. In the past month I’ve talked about AI on all of my platforms and even appeared on a live panel titled “How AI Can Make Us Better B2B Marketers”.

These AI tools, impressive as they might be, did not deliver on their promises to help with our work. I’ve tried every prompt under the sun for tasks ranging from brainstorming, title generation, and topic suggestions to writing, editing, and summarizing. Most of the time, I would spend 30+ minutes fiddling with a single prompt in the hopes of saving 10-15 minutes on a task that I didn’t feel like doing. Not particularly efficient, is it?

I wanted to be fair. In fact, I opened both ChatGPT and Notion AI again while working on this piece. And I’m ready to admit: Notion AI did help me with the writing process. I took the full text of my earlier piece on AI from Substack, pasted it into the AI, and asked it to turn my main points into an outline. That outline then helped me organize my thoughts and begin working on adding in new notes and research for this piece.

Did Notion AI save me time? Yes, about 10 minutes.

It was a trivial task, but it did speed up a small aspect of the process and helped me avoid the overwhelming anxiety of staring at a blank page. But none of this would have worked if I hadn’t already produced a full piece on the same topic. Notion AI simply took out quotes from my existing work and wrapped them in a pretty bow.

I tried to involve the AI further. I really wanted ChatGPT and Notion AI to help me figure out the best points from my previous piece. I tried many prompt variations and pasted my Substack essay, LinkedIn posts, video transcripts, and other notes. But the AI couldn’t even find one key idea to help me with my thesis.

After failing to disprove my own argument, I needed to recruit a human writer for feedback and help. The piece you’re reading right now took 5+ hours of input from Kaashif Hajee on top of all the thinking, writing, and researching that I had already done. All of this writing was produced with stinky human blood, sweat, and tears.

Not Everyone Wants AI Features

Since ChatGPT blew up the internet, we’ve seen multiple tech companies clumsily push out AI-based features to their users.

As far as I’ve seen, many of these implementations have been lackluster, frustrating loyal users more than helping them. Both SEO and journalism communities first reacted to Bing AI with enthusiasm, but after seeing how error-prone the product was, many experts switched to expressing skepticism. Users, on the other hand, seem mostly entertained by the weirdness of Bing’s chatbot, “Sydney”, but I haven’t seen anybody talk about the AI improving their search experience.

The reactions to Notion AI have also been a mixed bag. On February 22, Notion announced that their AI features are no longer in private alpha testing, jumping immediately to full paid implementation. Their CEO, Ivan Zhao, closes out the announcement with the following words:

“Perhaps the most exciting part of today’s launch is that for Notion AI, this is only the beginning. Using your feedback as our guiding light, we’ll continue to improve the experience and introduce new AI capabilities for you.”

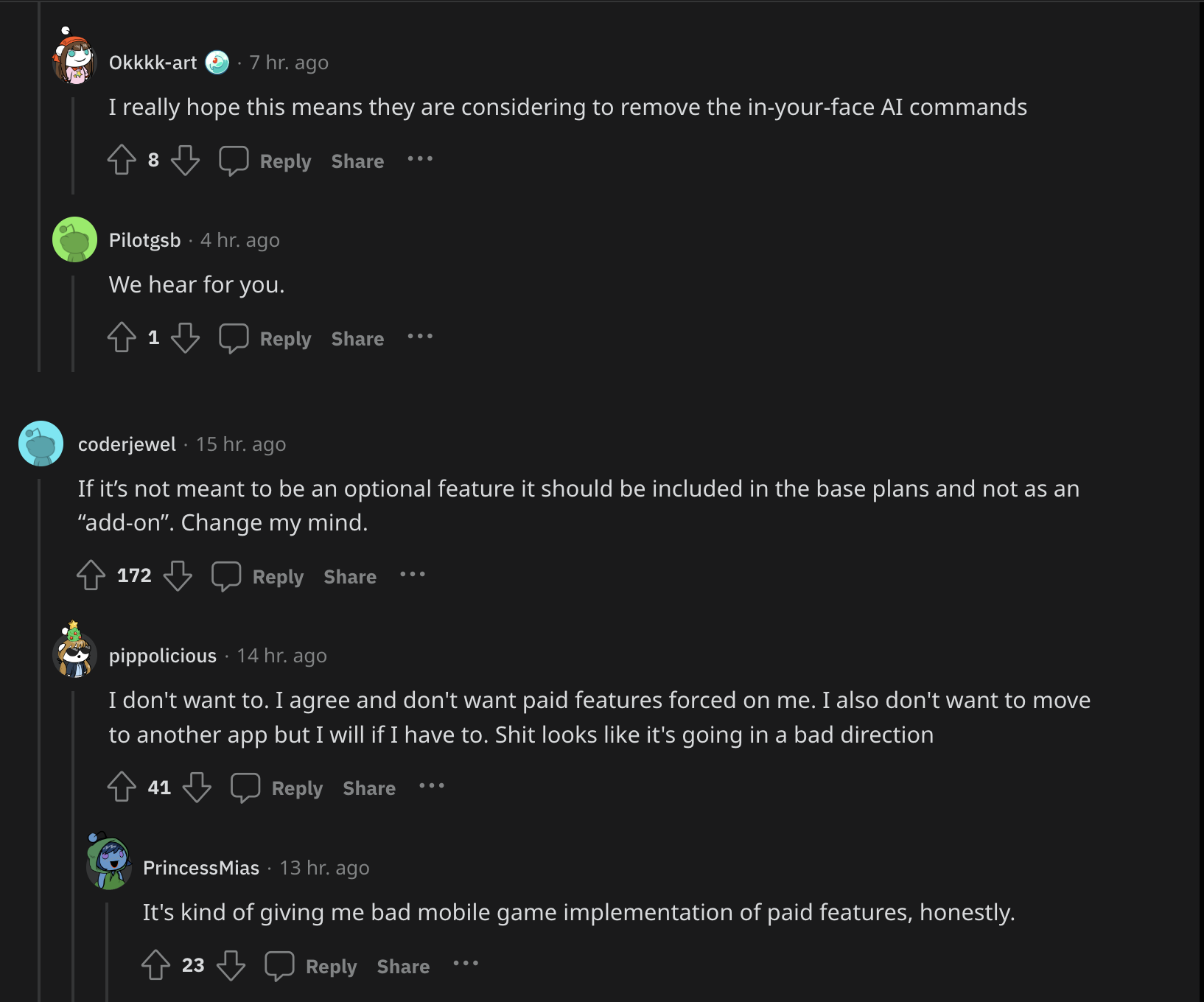

His enthusiasm rings hollow, however, given that Reddit is currently discussing the confusingly tone-deaf emails that Notion’s users have been getting from customer support when they simply ask to turn off AI features in their workspace.

As a long-time fan of Notion, I am disappointed and stressed to see the words “Notion AI isn't intended to be an optional feature, so it's not possible for you to turn it off in settings.” A feature like AI-generated text isn’t an essential aspect of Notion’s note-taking and project management capabilities. This aggressive push by Notion’s team is even more concerning when you consider that Notion AI is a paid add-on, costing $10 a month per user.

This single feature is more expensive than Notion’s entire team plan ($8 / user / month when billed annually) and 2/3 of the price of Notion’s business plan ($15 / user / month billed annually)!

This feature was promoted as a slow, optional test to see how the users would respond. Yet a vocal subset of Notion’s users are legitimately unhappy (including me), and Notion has yet to change course on pushing their new AI features with no option to remove them.

Stop Falling for Magic Tricks: AI’s Illusions

I was angry watching video essayist Tom Scott’s recent piece about AI.

In that video, Tom brings up a wonderful use case for ChatGPT. He used the AI to create and implement a script for labeling an archive of 100,000 emails using his preferred categorization system. The implementation worked, saving him countless hours and getting rid of an annoying problem that had plagued his inbox for years.

I love that use case. It’s perfect for an AI that’s based on a Large Language Model (LLM). Pick up the patterns within emails, understand the categories proposed by the user, and write a script that would automate that exact task in Gmail. I think that any of you suffering from the same issue should probably try out a ChatGPT solution too.

But what made me angry was Tom Scott’s conclusion. At the end, he describes the fear he felt while interacting with ChatGPT. And he says this: “after years of being fairly steady and comfortable, my world is about to change”.

These types of hyperbolic predictions about generative AI changing fundamental aspects of our existence make my blood boil. I don’t know what’s going to happen 50 or even 20 years from now. But I do know that the AI technology we have today, in February of 2023, is NOT life- or career-changing for most people, including marketing professionals.

The text you get when interacting with any LLM-based AI tool is not a result of a human-level intelligence speaking back at you. What you’re witnessing, scary as it seems, is simply an illusion. AI is just smoke and mirrors, reflecting your own thoughts back at you.

First of all, the current tools you’re interacting with weren’t made without massive, painful, and underpaid human intervention. For instance, a Time investigation found that OpenAI outsourced moderation work for ChatGPT to Kenyan laborers earning less than $2 per hour.

Second, as described by Ben Schmidt in “You've never talked to a language model”:

Any LLM-based AI “is, effectively, trying to predict both sides of the conversation as it goes on. It’s only allowed to actually generate the text for the ‘AI participant,’ not for the human; but that doesn’t mean that it is the AI participant in any meaningful way. [...] The only thing the model can do is to try to predict what the participant in the conversation will do next.”

The AI is responding back to you, using the massive dataset it was trained on to pick up on patterns that match what you, the human user, expect to see in its response. It’s simply a very fancy and powerful autocomplete. It’s not intelligent. It’s not sentient. And it’s often not particularly useful.

ChatGPT and Bing AI are very impressive magic tricks. As Dan Sinker described in a recent essay, the AI is using misdirection to guide your attention away from the mechanics of the trick itself. Instead, you’re deluded into thinking that what you see is literal magic:

“When it all comes together just right, it's hard not to have a sense that you're working with something more than a simple computer program. It's a great trick, not just because it's technically impressive but because it makes users feel like they're witnessing something truly astounding.”

But even the most impressive magic show is simply a performance confined to narrow execution and timing. If you look closely enough, you’ll notice string and wires holding up the floating set.

Why Generative AI Isn’t Good Enough For Content Marketing

Let’s move on to how these AI models fit into marketing work. Since our work is mostly focused on content, I can’t speak much to other marketing specialties, but I do believe that a lot of these points will still apply.

What Makes Good Content?

If ChatGPT is supposed to help you with content marketing, it should assist in achieving the same standards as a human expert. For that, we need to agree on a common definition of “quality” as it applies to content marketing.

What is content supposed to do? Like any other marketing material, good content has two key jobs:

Deliver value to the person engaging with your content. Somebody is consuming whatever you made. They came there for a reason. What do they want to get out of it and are you actually delivering that to them?

Help you achieve your own goals as the creator. Whether you’re a solo creator or representing a brand, you have goals. Most likely, you’re trying to get attention and increase your revenue. But no matter what you set as your objective, content is only "good" when it helps you achieve it.

Your content needs to keep the promises made both to your audience and to you as the creator behind that piece of content. Quality is not about format, style, or even your ideas.

Quality means that your content delivers value to your audience and achieves your organization’s business objectives. No more, no less.

Our graphic illustrating some of the key findings from Demand Gen’s “2022 B2B Buyer Behavior Survey”.

Most of the time, you want readers engaging with your content to either share it with their network or to enter your sales pipeline, such as by contacting your sales team. As we can see from Demand Gen's “2022 B2B Buyer Behavior Survey”, effective B2B content needs to:

Back up claims with research

Incorporate “shareable stats and insights”

Tell “a strong story” to peers, internal stakeholders, and buying committees.

The Differentiation Dilemma

If you want results, you need to differentiate your content from everything produced before you.

Differentiation is an obvious business advantage. As Joe Zappa, founder of Sharp Pen Media, told us:

“Excellent marketing writing very specifically lays out the singular expertise of the company or individuals putting it out or captures what they, and they alone, offer to their target audience”.

Without properly differentiating your point of view “you can't build a brand or stand out in the crowded online marketplace”. If everyone already sounds the same, adding another voice to the chorus won’t get you attention.

But incorporating a unique perspective into your content is extremely hard. There’s a reason why Joe and I can both run businesses providing content marketing services. Many marketing teams and founders do not have the bandwidth or expertise to consistently produce effective content.

That’s why ChatGPT is so compelling: think of all the money and time you could save if you no longer needed to find, evaluate, and budget for expensive marketing consultants and agencies. But this dream rests on an assumption that dumping information = good writing. And that’s simply not true.

I used to work as a writing instructor in college. My students, all incredibly smart and talented individuals at a very selective school, were terrible writers. I would constantly ask my students "so what?" and push them to actually articulate their opinions instead of parroting quotes from their source material back at me.

If I learned anything from those 200+ hours of teaching writing, it would be this: understanding the words you read doesn’t mean you understand the ideas behind them.

When you push a human writer to articulate why they think the way they do, that’s when the real magic happens. AI can never capture even a tiny speck of that elusive fairy dust, because AI cannot have a motive behind its writing.

It's not enough to simply repeat what others have already said before you. Good writing does at least some of the following:

Adds something new

Contributes a unique perspective

Introduces a different point of comparison

Analyzes past information using a new framework.

Good content marketing is a lot like good academic writing. If you want your post to get attention, you need to do something that your competitors haven't.

Both Kalyna Marketing and Joe’s content studio are staffed by writers with academic and journalistic backgrounds. Kaashif and I used to work at our college’s writing center together. And Joe consciously chooses to hire people with backgrounds similar to his own:

“I think a big part of my ability to leave that impression originates from my research and writing capabilities. And I honed those abilities as a journalist and academic. That's why I hire writers with those backgrounds. I'm in search of writers who will really wow my clients with their writerly rigor, voice, accuracy, and research.”

Years of training to understand effective written communication and critical thinking are essential for content marketers.

You cannot convince people to trust you with their money if you don’t know how to write about what you do or why they should care. And if everyone has already written about the same tactics for building an SEO strategy, your post with those claims won't actually help or inform any readers.

Why would someone read your dull, unoriginal post instead of somebody else's?

As Google’s John Mueller (@JohnMu) put it on Twitter on February 24, 2023: “Why do you want to just publish something for the sake of publishing something, rather than publishing something you know to be useful & good? (This is not unique to LLM/AI NLG, it's the same with unknown-quality human-written content.) What do you want your site known for?”

How We Do Our Content Marketing Work

Good content, like any good marketing, is contextual.

As I described in a short video, the effectiveness of your marketing depends on your business, your audience, the current market, and all other external factors influencing buyer expectations.

We deliver results for our clients by tuning into those specifics and diving into the worlds of their customers and potential buyers. Our engagements are often wide-ranging with activities like interviewing internal stakeholders, looking for external experts, browsing industry forums, reading hundreds of pages of industry publications, hunting down recent academic research, and dissecting memes.

We make good content because we treat every client and every audience as special and unique. When we take the time to understand all of the specifics that make a particular audience tick, that’s when we can determine both an effective content strategy and the appropriate tactics to implement it.

You cannot reliably generate results from your marketing tactics without a deep understanding of the “why” behind the “how” of your campaigns. As Rand Fishkin wrote recently in “Too Few Marketers Grasp the Difference Between Strategy vs. Tactics”:

“’Why are we investing in this tactic?’ is a question every marketer should be able to answer with clarity. If you struggle to explain why your PPC efforts focus on a particular set of keywords or why your branding conveys a bright, vibrant color palette, or why your PR efforts target small creators and publications over big, legacy media, you’re in trouble. Your company probably is, too.”

Even if your tactics are currently working without defining a proper strategy, how will you adapt once the market changes? What will you base your new strategy on without having understood your previous success in the first place?

Why Generative AI Falls Short: 3 Key Problems with AI Writing Tools

As Philip Moscovitch wrote in his excellent piece on Jasper.ai, AI tools cannot understand proper context and tone. When he asked the AI to generate restaurant reviews with a “disgusted”, “angry” or a “delighted” tone, the results were very similar and continued focusing on the negatives of the hypothetical dining experience… even when trying to sound positive.

Here are Philip’s own words:

"Professional reviewing — reviews written by people knowledgeable in their fields, who could provide context — has been essentially destroyed by user reviews, under the guise of democratization. Through our free labour, we have created value for sites by doing our own reviewing. But you can easily imagine a near-future in which most of the reviews and the responses to reviews on these sites and apps are written by bots."

In my opinion, these are the three key problems with using generative AI for content marketing:

Pattern matching algorithms can't create anything new

Focusing on quantity instead of quality is a race to the bottom

Generative AI doesn’t understand logic.

Let’s take a closer look.

Pattern Matching Algorithms Can't Create Anything New

In “On the Dangers of Stochastic Parrots”, Emily M. Bender, Timnit Gebru, Angelina McMillan-Major, and "Shmargaret Shmitchell" explain that a large language model (like GPT-3) is:

"a system for haphazardly stitching together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot."

Basically, AI looks at a big set of data and notices when certain characteristics (words, visuals, etc.) tend to appear together. It's operating on correlation without any concept of meaning.
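To make “correlation without meaning” concrete, here is a deliberately tiny sketch in Python of a bigram model (a simple Markov chain). It only records which word followed which in its training text, then extends a prompt by sampling one of those observed followers. This is a toy under loudly stated assumptions, not how GPT-3 or ChatGPT are built (they use large neural networks over tokens and far longer context), and the names `train_bigrams`, `continue_text`, and the sample corpus are hypothetical. What it does share is the basic move: given a fragment, pick a statistically plausible next word.

```python
import random
from collections import defaultdict


def train_bigrams(text):
    """Record, for every word in the text, each word that ever followed it."""
    words = text.split()
    follows = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        follows[current].append(nxt)
    return follows


def continue_text(follows, start, length, seed=0):
    """Extend `start` one word at a time by sampling an observed follower.

    Words that followed more often in training are picked more often,
    because they appear more times in the candidate list.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    out = [start]
    for _ in range(length):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # nothing ever followed this word, so the model has nothing to say
        out.append(rng.choice(candidates))
    return " ".join(out)


# A hypothetical miniature "training corpus".
corpus = (
    "content marketing helps brands grow . "
    "content marketing needs research . "
    "good content needs a point of view ."
)
model = train_bigrams(corpus)
print(continue_text(model, "content", 8))
```

The output can look like passable marketing filler, yet every adjacent word pair in it was copied from the corpus. Nowhere does the program represent what “content” or “research” means; it only knows which words sat next to each other.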

AI’s "parroting" isn't the same as human creativity. Humans think with intention and can contextualize the information that they are exposed to. These AI models can't do that. As they get better, they might have bigger datasets or gain the ability to "remember" more words, but even taken to an extreme, this technology will never understand meaning.

Because I’ve seen marketers misunderstand this fundamental mechanism powering generative AI, here’s another explanation in slightly different terms. As Murray Shanahan from Imperial College London described in “Talking About Large Language Models”:

“LLMs are generative mathematical models of the statistical distribution of tokens in the vast public corpus of human-generated text, where the tokens in question include words, parts of words, or individual characters including punctuation marks. They are generative because we can sample from them, which means we can ask them questions. But the questions are of the following very specific kind. ‘Here's a fragment of text. Tell me how this fragment might go on. According to your model of the statistics of human language, what words are likely to come next?’”

In other words, the way in which an AI like ChatGPT interprets language is much more limited in scope than you might imagine looking at its output. There is no space for nuance, intention, or subjectivity. The AI is throwing darts at a wall without any eyes: even if some attempts hit the bullseye, many others will miss the mark completely.

Because an LLM doesn’t understand meaning, it cannot create helpful, useful, or relevant content for your business. Niki Grant explained the problem quite well:

“What a user finds ‘helpful’ is subjective and nuanced. [...] An AI tool will not consider or address this nuance, which may result in brands creating a volume of ‘same-y’, bland, tone-deaf content, which could do more to hinder than help not only a site’s presence in the SERPs, but its reputation amongst consumers.”

Side Note: If you’d like to understand how ChatGPT actually works, this 18,000+ word breakdown by Stephen Wolfram should tell you everything you need to know.

Focusing on Quantity Instead of Quality is a Race to the Bottom

I don’t need to convince you too much: 83% of B2B marketers already understand that quality of content is a key differentiator.

Graph from Content Marketing Institute’s “13th Annual Content Marketing Survey”.

I've made my entire career so far about preaching that quality is more important than quantity. That’s why I am so confused by all of the enthusiasm around AI’s promise to help us make content faster. Why do we want to make stuff faster, exactly? What's the point?

When you measure marketing success based on quantity of output, you will never see good business results.

Too Much Content is Spam By Another Name

There are few reasons for any company to publish 20+ blog posts a month, and for smaller teams, the amount of content they can produce without sacrificing quality is much lower.

Sooner or later, a company pumping out too much content will either run out of unique ideas or their writers will burn out and begin producing generic garbage.

If 10 CRM companies each publish 40 blog posts a day for 1 year, that's 146,000 blog posts (10 × 40 × 365). How many unique topics can these companies possibly cover? We would probably end up with 10,000+ pieces on how CRM software can help businesses "improve retention" and "consolidate interactions".

Who will read these? What purpose can these posts possibly serve?

Even if those articles somehow continue to rank well on Google Search, why would any human reader actually click on any particular one?

You basically end up playing roulette with all of your other competitors, praying that you will get ahead based on nothing besides pure chance. At best, you're competing for the best keyword-stuffing (where “best” gets defined based on whatever the current algorithm thinks, not what your human audience might like).

More often than not, I already tell my clients and prospects that they are trying to publish too much content. When you take this tendency to the scale that AI-generated content can provide, the pool of mediocre content becomes an ocean.

We already have a word for pumping out large quantities of internet content without concern for quality or utility: spam. Just look at this excerpt from Google’s guidance on AI-generated content:

“Using automation—including AI—to generate content with the primary purpose of manipulating ranking in search results is a violation of our spam policies.” - Via Google’s guidance on AI Content.

The same problem appears in the industries that we marketers get our sources from. For instance, the scientific community is already flooded with low-quality papers as you can see from this LinkedIn post by Dr. Jeffrey Funk:

“We don’t need more papers, we need a smaller number of high quality papers. [...] These papers should be worth reading by thousands of scientists and engineers both inside and outside academia. A significant fraction of these papers should lead to some changes in the ways society does things.”

Publish Less Content, But Make It Good

We already struggle to find quality information online.

The real problem isn’t a shortage of lackluster content; it’s locating authoritative and trustworthy sources. Edelman’s 2021 Trust Barometer showed that 56% of respondents believe that “business leaders are purposely trying to mislead people by saying things they know are false or gross exaggerations”. Yet I see businesses bragging on LinkedIn every single day about all the content they created with ChatGPT in 10 minutes.

By speeding up your content production to an extreme, you’re avoiding the real hard work of building trust with your customers, partners, and investors. How can you build thought leadership if you aren’t even putting your own thoughts out on paper but outsourcing them to the tools that can produce content the fastest?

Most of our clients don’t actually need to publish the type of volume that AI is supposed to help with. If you’re a smaller brand and you’re putting out only a couple of pieces a month, AI is not saving you that much time. As Wil Reynolds put it on Seer Interactive’s blog:

“If you only need to make (or have budget to make) 20 pieces of content this year, then maybe all this AI stuff is overblown. (As long as you aren’t making 20 pieces of content easily answered by AI). However, if you see the need to make 100 or 200, then maybe you do need more assistance to scale the work of the team.”

Marketers and clients in the B2B world do want quality content. But sometimes I wonder if they truly understand what “quality content” means.

When you actually get into these generative AI tools and learn to write a prompt, you hit a wall pretty quickly. No matter how good your prompt is… it’s very difficult to improve the AI’s output beyond the initially impressive paragraphs it produces on the first try.

Example - Comparing ChatGPT’s Output to Our Human Writing

Let me show you what I mean.

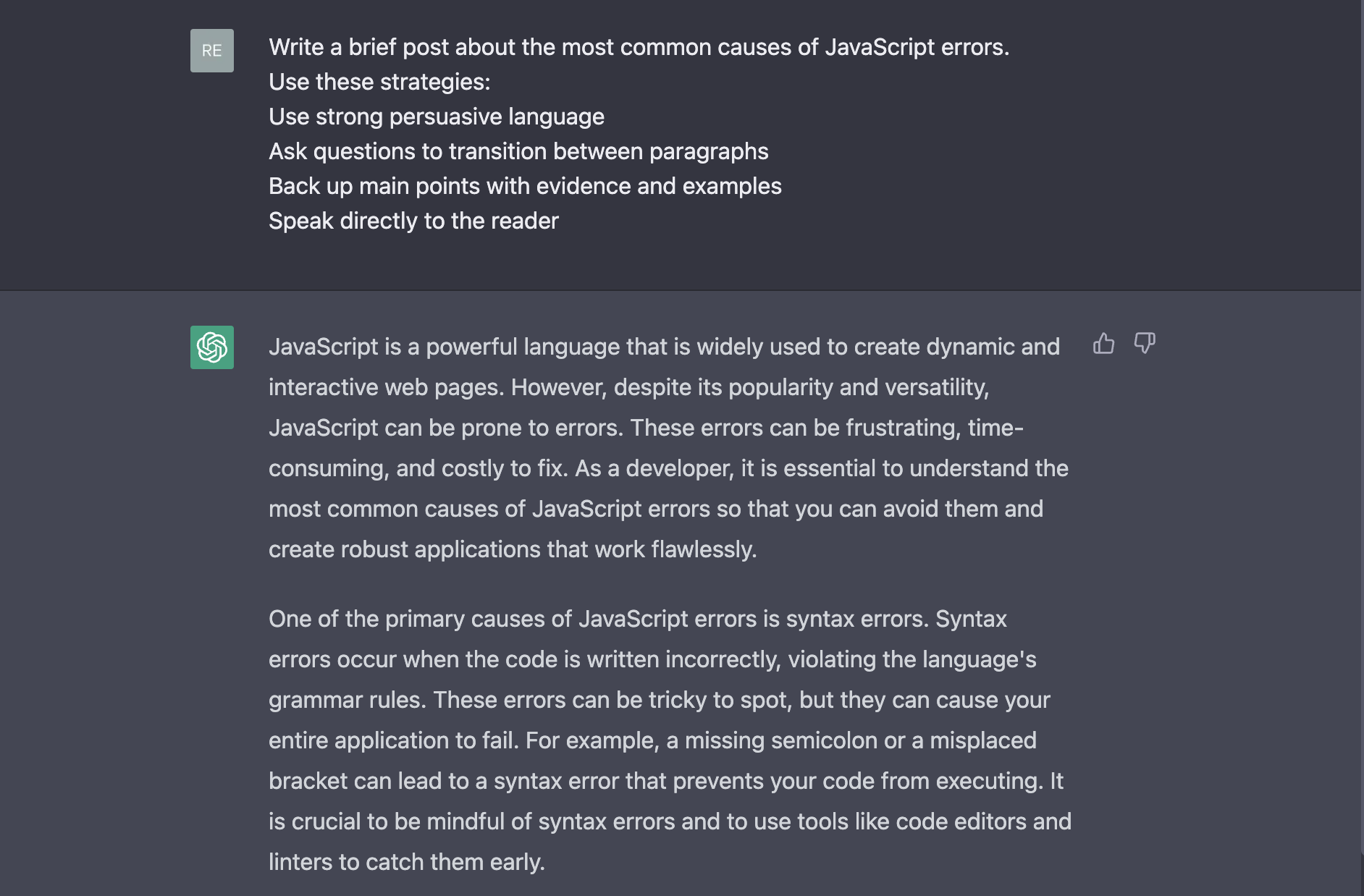

Let’s take a prompt that has been curated and optimized for quality output from ChatGPT. I’ve decided to use one of the prompts recommended in Rob Lennon’s Twitter thread. This thread got 7.1M views and 48,000 likes, so we can assume it’s a fair shorthand for “best practices” in prompt writing.

Prompt Template

Write a brief post about [Topic]. Use these strategies:
- Use strong persuasive language
- Ask questions to transition between paragraphs
- Back up main points with evidence and examples
- Speak directly to the reader

Topic

To make this comparison more entertaining, I’ve chosen a topic that we have explicitly written about for a past client: common causes of errors in JavaScript code.

ChatGPT’s Result

In classic ChatGPT style, the introduction is vague, generic, and condescending. If I am learning about a specific topic within JavaScript programming, I don’t need to be told what JavaScript is. If I am a developer, I would feel whiplash at some random blog post telling me that it’s essential for me to understand this topic so that I can “create robust applications that work flawlessly”. (And flawless applications aren’t a thing, as any developer will confess to you after a couple of beers. All code is basically held together by elaborate layers of duct tape and needs regular maintenance, bug fixes, and updates.)

The main causes of errors that ChatGPT listed are also familiar. Syntax errors, incorrect variable declarations, and missing error handling for asynchronous code are all very basic types of mistakes, and mostly not even unique to JavaScript. ChatGPT failed to include anything specific to the language, show practical examples, or even say anything particularly helpful.

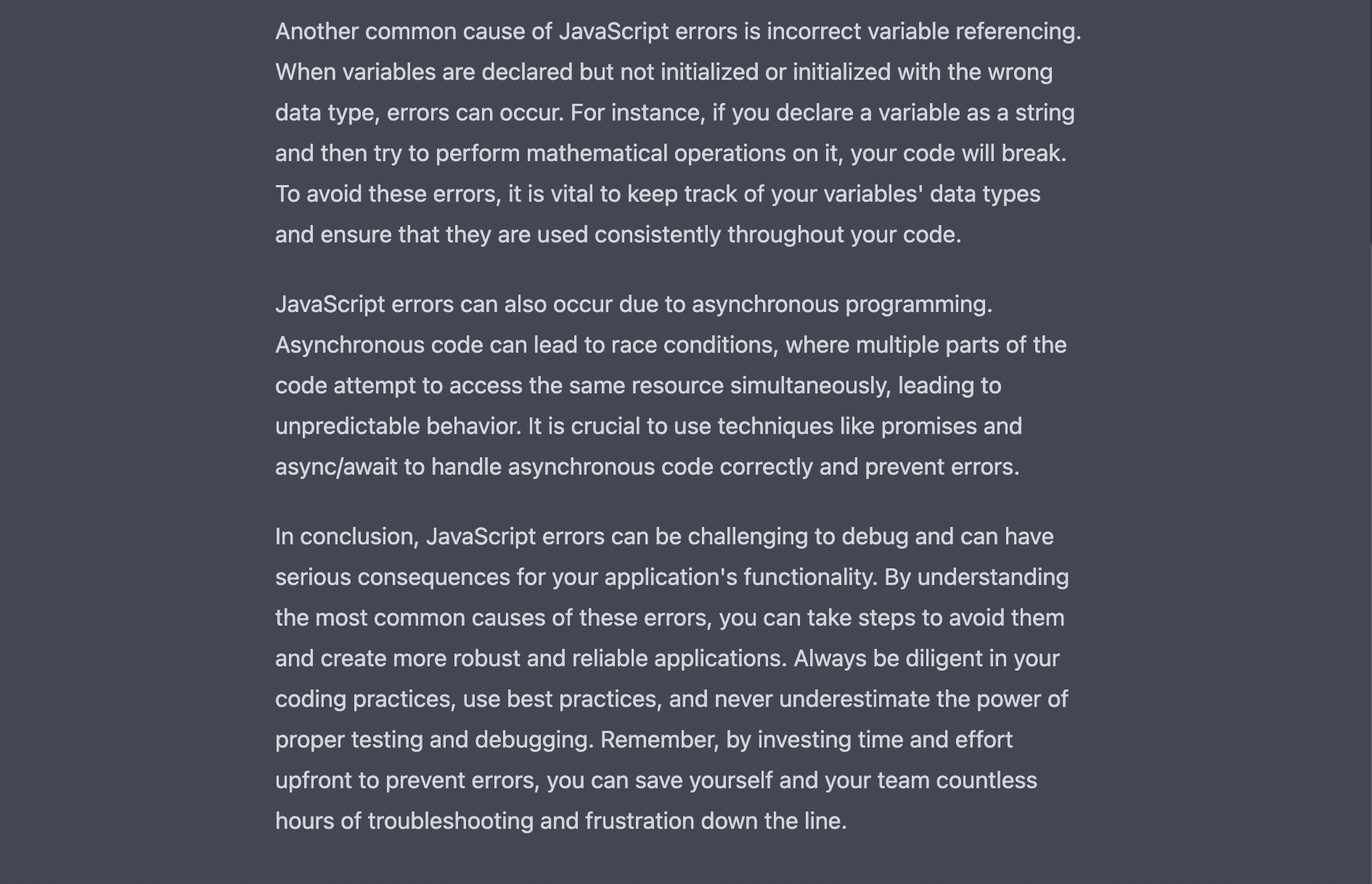

Our Article

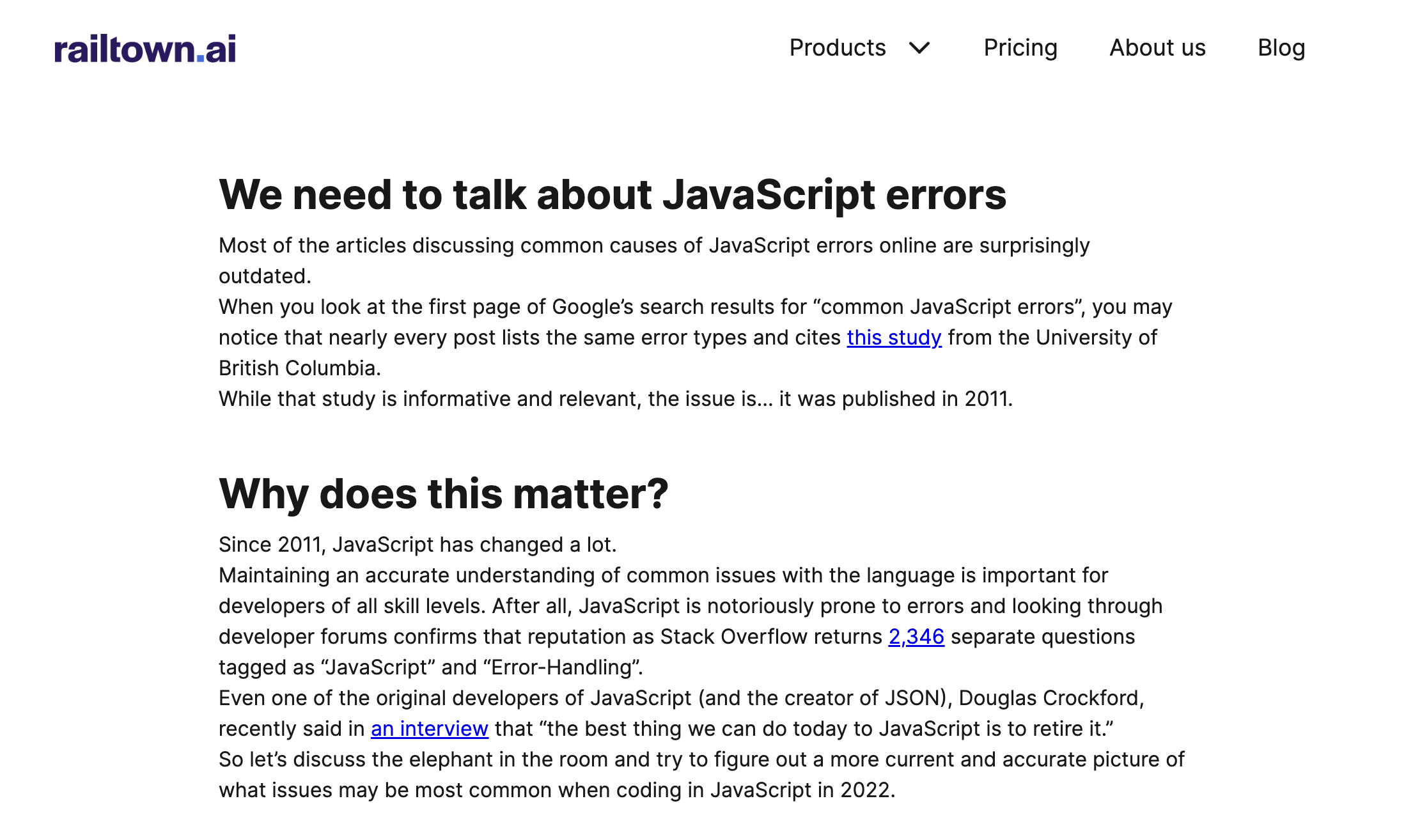

Now, here’s a snippet from an article that I helped create on the same topic. This isn’t a particularly standout piece; we didn’t do any original research or conduct interviews with subject matter experts (SMEs). This was a relatively simple blog post, commissioned on a tight deadline. Nothing groundbreaking.

But let’s see if you notice the differences between what our team produced and ChatGPT’s earlier output:

In the introduction, we immediately tell the reader why they should be reading our piece. We know that the reader has heard of JavaScript. We address the existence of other pieces on the topic, and we make the case as to why our piece is valuable.

Then, we explain all of the practical applications of the academic paper we’re using as our primary source. We group all of the errors into buckets and explain how common they are and what makes them different.

Throughout the piece, we keep things short and to the point, respecting the time and expertise of our hypothetical readers. This piece is written to sound like one developer speaking to another.

To create that article, I spent 5+ hours simply hunting down a reliable and updated review of common errors. This meant crawling through pages and pages of Reddit and StackOverflow threads, ending up on page 9 of Google Scholar, searching library databases, and reviewing slide decks for industry conferences.

Sure, I don’t know how to code in JavaScript and ChatGPT technically does. But the article I worked on sounds a lot more trustworthy and empathetic than the AI-generated Wikipedia knock-off.

Generative AI Doesn’t Distinguish Fact from Fiction

Pattern-Matching Does Not Equal Understanding

As Philip Moscovitch put it, "the AI will happily just make sh*t up" (censorship mine).

Amid all the hype, we’ve already seen multiple news stories about AI misunderstanding and misquoting claims. The supposed search engine of the future unveiled by Microsoft has produced so many errors that there are roundups like “Bing AI Can’t Be Trusted” on the DKB Blog.

As I explained above, I’m not surprised that pattern-matching algorithms cannot understand the subtlety of complex arguments. AI’s writing reminds me of high school essays or the student writing I saw while working as a writing instructor in college: copying words that they’ve heard from their teacher without putting in the effort to actually understand what those words mean.

In fact, I can prove this to you.

A couple of months ago, I published a LinkedIn post where I argued that artificial intelligence doesn’t equal artificial expertise. My conclusion was based on a small experiment that I ran over the summer, where I asked a GPT-3 based AI tool and my teenage brother to write blog post intros based on the exact same information. In fact, you can still take the quiz on our site.

Based on the survey results as of November 2022, most people could not distinguish what was written by a high school student with 6 minutes per question from what was written by the expensive AI tool branded for professional marketers. You can see our full results below:

Survey results from our “AI vs. High School Student” quiz.

When given a cookie-cutter business blog topic, AI is excellent at writing like humans… just not the humans you’d want handling your blog.

AI’s Arguments Are Shallow And Boring

ChatGPT’s output makes me think of this illustration from the excellent academic writing book “They Say / I Say”:

“Writing a good summary means not just representing an author’s view accurately, but doing so in a way that fits what you want to say, the larger point you want to make.” - from “They Say / I Say”.

AI is a very lazy writer. Because it doesn’t understand meaning, it can only recount one statement after another, with no coherent larger view or argument behind any of its claims. ChatGPT doesn’t feel, so it cannot imbue its writing with any emotional purpose. And writing without emotion or personality is… boring. I feel my eyes closing whenever I have to look through AI output for more than 10 minutes.

Let's put it this way: AI output never goes beyond the really, really shallow arguments you’ll find in the worst results on the first page of Google. At best, ChatGPT sounds like the first 30 minutes of an intro-level 101 course you took in college. If you try to build on ideas beyond that, these tools don't get it.

Generative AI cannot understand what it’s talking about. The AI doesn't know how one idea connects to another, or what the correct logical relationships are between the different elements of a topic. How could you ever build a proper argument on such a faulty foundation?

Hallucinations and Lies of Generative AI

Because AI cannot understand what it's talking about, depending on it creates another problem: the AI will make up facts and lie to you.

The most insidious part of these frequent inaccuracies is their appearance of truth. ChatGPT's writing seems believable. It gets things right about 80% of the time on most topics. But what happens with the other 20%?

Search engines already had trouble parsing information without AI, as you can see in this entertaining example of Bing answering “what’s the human population on Mars” with “Ten billion”.

In our desperate race to save time while creating content, how many teams will actually take the time to properly question and cross-reference every single claim that the AI makes? We’re already tired, overburdened, and stretched too thin. I simply don’t believe that every single marketer will continue to do full and comprehensive due diligence while ramping up their content production.

And if we unleash this level of misinformation into our branded content… why would any customer ever trust us with their money again?

But What About Results?

I can already hear some of you saying: “But Mariya, what if I don’t care about good writing or the process? I only care about whatever is going to bring results for my business, whether created by ChatGPT or a human.”

Let me spell this out as clearly as I can:

Until AI takes over all buying decisions for businesses and consumers around the world, marketers need to influence human behavior. Humans make purchasing decisions based on emotional factors. Even if you manage to trick some buyers with AI content, that won’t last forever. Humans don’t buy things that they don’t want to buy. And if you force someone into paying you out of necessity or a lack of alternatives… unless they genuinely like your company, they will churn and leave you for another provider as soon as one shows up. Marketing depends on getting human beings to like and trust you. Gimmicks will always run out.

I understand that results are important. I’m not arguing in this post that we should ditch all thoughts of potential ROI and the financial benefits of leveraging AI for content marketing. Instead, I am showing you why generative AI, in its current state, will not get your marketing campaigns better results than qualified human experts.

Final Thoughts: AI Won’t Steal Your Job

Even if you want it to happen, AI won’t take your job.

My prediction is that ChatGPT and generative AI will proceed along the same path as many other hype cycles: rapid adoption, constant discussion, slow disappointment, and decline.

As more marketing teams try to implement AI into their workflows, they will discover all the flaws inherent to that technology. And once enough annoyances pile up and the novelty fades… those ChatGPT prompts will be left gathering metaphorical dust in a forgotten folder of somebody’s computer or Google Drive.

Sure, some companies are implementing AI instead of hiring human experts. As marketers, we need to justify our work against all this glamorous hype. Joe Zappa views our task this way:

“Many businesses will cut human writers and use AI instead. To earn our part of the marketing budget, we writers need to be a lot better than AI. Some people will never recognize the difference. But many will, and for those prospective clients, it's essential that we demonstrate our ability to strategize with them and make differentiated arguments on their behalf that transcend the commoditized content at which AI excels.”

I am not particularly worried for the future of Kalyna Marketing or for Joe’s content agency. Those of us who already think deeply through our work, take the time to understand our clients and the markets they operate in, and embed personality into our content – we will be fine. ChatGPT cannot replicate a real point of view, and I don’t see that changing anytime soon.

If you enjoyed my thoughts on AI and quality content, check out my YouTube video on the same topic.